Theory and Methods for Inference in Multi-armed Bandit Problems

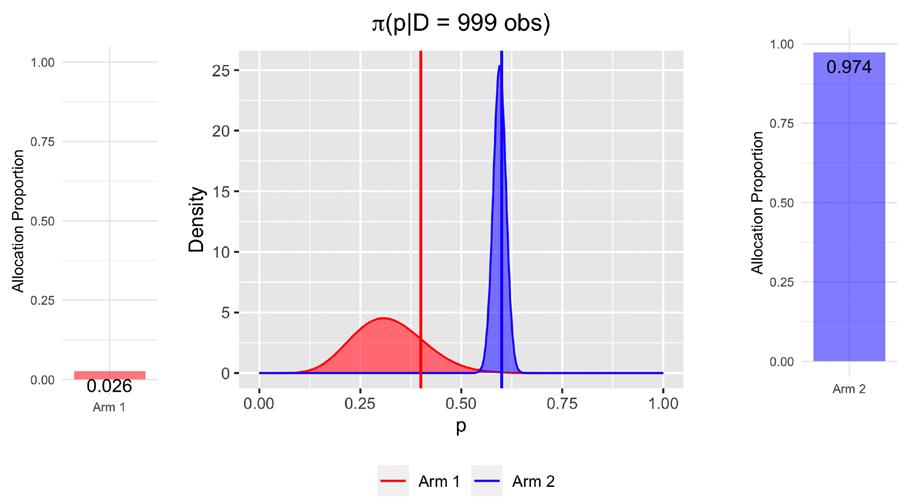

Multi-armed bandit (MAB) algorithms have long been advocated as a tool for conducting adaptively randomized experiments. By skewing allocation towards the more efficient or informative arms, they have the potential to improve participants' welfare while offering a more flexible, efficient, and ethical alternative to traditional fixed-allocation studies. However, such allocation strategies complicate statistical inference. It is now recognized that traditional inference methods are typically not valid when applied to MAB-collected data: classical estimators can be considerably biased, and standard hypothesis tests face related problems.
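To make the bias concrete, here is a minimal simulation sketch. It is not taken from any of the talks. It assumes a two-armed bandit with standard Gaussian rewards, both true means equal to zero, and an epsilon-greedy allocation rule; the function names and parameter values are hypothetical and chosen only for illustration. Under these assumptions, the ordinary sample mean of an arm, averaged over many repeated experiments, should come out below the true mean.

```python
import random


def run_epsilon_greedy(horizon, epsilon, rng):
    """Run one two-armed experiment and return the sample mean of arm 0.

    Assumption for illustration: both arms have true mean 0 and unit variance.
    """
    true_means = [0.0, 0.0]
    counts = [0, 0]
    sums = [0.0, 0.0]
    for t in range(horizon):
        if t < 2:
            arm = t  # pull each arm once to initialize its sample mean
        elif rng.random() < epsilon:
            arm = rng.randrange(2)  # explore: pick an arm uniformly at random
        else:
            # exploit: pick the arm with the larger current sample mean
            arm = 0 if sums[0] / counts[0] >= sums[1] / counts[1] else 1
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        sums[arm] += reward
    return sums[0] / counts[0]


def estimate_bias(n_reps=5000, horizon=30, epsilon=0.1, seed=0):
    """Average arm 0's sample mean over many independent experiments.

    The true mean is 0, so this average is a Monte Carlo estimate of the bias.
    """
    rng = random.Random(seed)
    total = sum(run_epsilon_greedy(horizon, epsilon, rng) for _ in range(n_reps))
    return total / n_reps


if __name__ == "__main__":
    print(f"Estimated bias of the sample mean for arm 0: {estimate_bias():.3f}")
```

The intuition is that an arm whose early draws happen to be low is rarely pulled again, so its unluckily low sample mean is never corrected, whereas an arm with luckily high early draws keeps being pulled and regresses towards its true mean. With a fixed, non-adaptive allocation the same estimator would be unbiased.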

When & Where:

- Wednesday, May 11th, 7:00 PT / 10:00 ET / 16:00 CEST.

- Online, via Zoom. The registration form is available here.

Speakers:

- Anand Kalvit, Columbia University: “A Closer Look at the Worst-case Behavior of Multi-armed Bandit Algorithms”

- Aaditya Ramdas, Carnegie Mellon University: “Safe, Anytime-Valid Inference in the face of 3 sources of bias in bandit data analysis”

- Ruohan Zhan, Stanford University: “Inference on Adaptively Collected Data”

Discussant:

- Prof. Assaf Zeevi, Columbia University

The webinar is part of the YoungStatS project of the Young Statisticians Europe initiative (FENStatS), supported by the Bernoulli Society for Mathematical Statistics and Probability and the Institute of Mathematical Statistics (IMS).

If you missed this webinar, you can watch the recording on our YouTube channel.